Intel Core Ultra Series 3

Scalable performance and AI acceleration for embedded systems

COM-HPC and COM Express modules with Intel Core Ultra Series 3 processors

Accelerating Edge AI workloads for high-performance computing

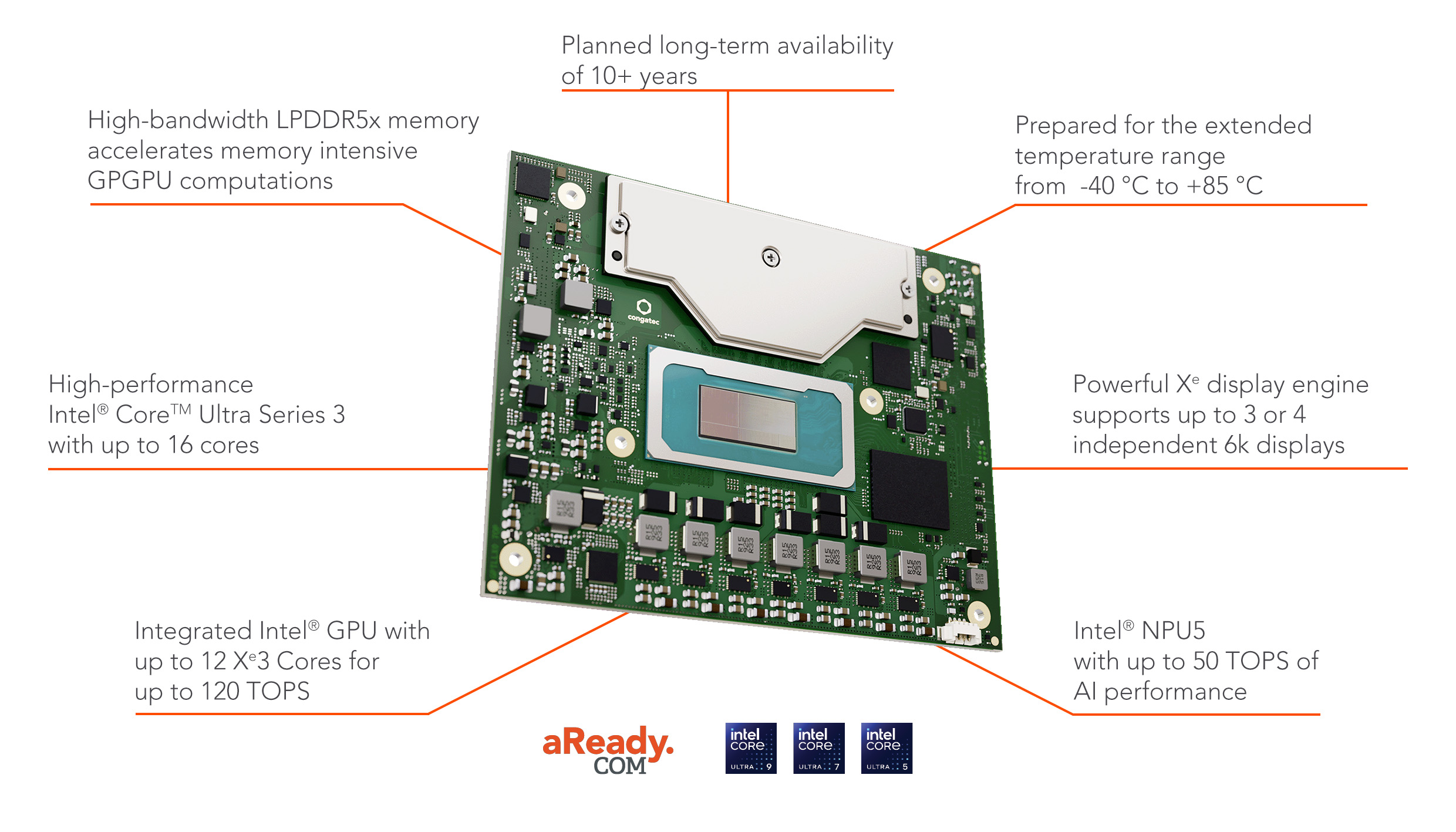

Intel® Core™ Ultra Series 3 processors (code name Panther Lake) offer powerful, efficient performance for edge AI workloads. Combining up to 16 CPU cores and Intel Xe3 graphics with an NPU5 for integrated AI acceleration, this SoC delivers up to 180 TOPS of AI performance. Moreover, the third generation of Intel Core Ultra processors offers extended availability of ten years for embedded designs.

Robust, Efficient Edge AI Performance

Delivering up to 180 TOPS of AI performance, Intel Core Ultra Series 3 processors are an excellent choice for AI-enabled embedded applications in many markets, including industrial automation, robotics, healthcare, point of sale (POS), smart city, and transportation.

Built on Intel’s groundbreaking 18A process technology, this new processor generation marks a significant gain in performance and computing density over previous processor series. The CPU features 4 P-cores (code name Cougar Cove), up to 8 E-cores, and 4 LP E-cores (both code named Darkmont), and delivers up to 10 TOPS. The integrated NPU5 enables low-power AI inference with up to 50 TOPS. For more parallel processing workloads, the integrated GPU with up to 12 Xe3 cores can deliver up to 120 TOPS, eliminating the need for discrete AI accelerator cards such as GPGPUs and saving space, power, and cost.
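The 180 TOPS headline figure is the sum of the per-engine peaks quoted above. The following minimal sketch makes that arithmetic explicit. The dictionary and function names are illustrative assumptions, and the total assumes all three engines run at their peak simultaneously, which real workloads may not achieve.

```python
# Peak AI throughput per engine in TOPS, as quoted in the text above.
PEAK_TOPS = {"CPU": 10, "NPU": 50, "GPU": 120}


def total_platform_tops(engines=PEAK_TOPS):
    """Sum the per-engine peak figures into the platform headline number."""
    return sum(engines.values())


def share_by_engine(engines=PEAK_TOPS):
    """Return each engine's fraction of the combined peak throughput."""
    total = total_platform_tops(engines)
    return {name: tops / total for name, tops in engines.items()}


if __name__ == "__main__":
    print(f"Platform peak: {total_platform_tops()} TOPS")
    for name, share in share_by_engine().items():
        print(f"{name}: {share:.0%} of peak")
```

The breakdown shows that the GPU contributes two thirds of the combined peak, which is why highly parallel workloads are the ones most likely to approach the headline figure.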

With Intel® Xe Matrix Extensions (Intel® XMX) and OpenVINO, developers can even implement self-optimizing machine learning that trains locally, without requiring a cloud connection to server infrastructure.
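As an illustration of how an application might choose among the CPU, GPU, and NPU at run time, the sketch below queries OpenVINO for the devices it can see and picks one by preference. The preference order, the helper names `pick_device` and `detect_devices`, and the CPU-only fallback when OpenVINO is not installed are assumptions made for this example, not a recommended policy.

```python
# Assumed preference: low-power NPU first, then GPU, then CPU.
PREFERENCE = ("NPU", "GPU", "CPU")


def pick_device(available, preference=PREFERENCE):
    """Return the first available device that matches the preference order."""
    for wanted in preference:
        for name in available:
            # OpenVINO can report indexed names such as "GPU.0".
            if name == wanted or name.startswith(wanted + "."):
                return name
    raise RuntimeError("no supported inference device found")


def detect_devices():
    """List the devices OpenVINO reports on this machine.

    Falls back to ["CPU"] when OpenVINO is not installed (an assumption
    made only so that this sketch runs anywhere).
    """
    try:
        import openvino as ov
    except ImportError:
        return ["CPU"]
    return list(ov.Core().available_devices)


if __name__ == "__main__":
    print("Selected device:", pick_device(detect_devices()))
```

The returned name can then be passed as the device argument when compiling a model with OpenVINO, for example `core.compile_model(model, device_name)`.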

From SWaP-C to High-End Designs

Intel® Core™ Ultra Series 3 processors represent a significant advance in x86 performance and efficiency. They enable a wide range of edge AI workloads, including computer vision, local natural language processing (NLP), large language model (LLM) execution, simultaneous localization and mapping (SLAM), and more.

With a wide power envelope ranging from 15 W to 65 W, embedded computing platforms based on Intel Core Ultra Series 3 scale from the smallest embedded form factors, such as COM-HPC Mini (95 mm x 70 mm), COM Express Type 10 (84 mm x 55 mm), and COM Express Compact (95 mm x 95 mm), up to workstation-grade performance on COM-HPC Client Size A (95 mm x 120 mm) with the highest-bandwidth I/Os.
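To compare the module footprints listed above, the following sketch computes each board area and filters the modules that fit a given mounting space. The dimensions come from the text, while the function names and the example mounting space are hypothetical.

```python
# Module outlines in millimetres (width, height), as listed in the text.
FORM_FACTORS = {
    "COM Express Type 10": (84, 55),
    "COM-HPC Mini": (95, 70),
    "COM Express Compact": (95, 95),
    "COM-HPC Client Size A": (95, 120),
}


def footprint_mm2(name):
    """Board area of a module in square millimetres."""
    width, height = FORM_FACTORS[name]
    return width * height


def modules_that_fit(max_w_mm, max_h_mm):
    """Names of modules that fit the given space, smallest area first.

    A module may be rotated by 90 degrees to fit.
    """
    fitting = []
    for name, (w, h) in FORM_FACTORS.items():
        if (w <= max_w_mm and h <= max_h_mm) or (h <= max_w_mm and w <= max_h_mm):
            fitting.append(name)
    return sorted(fitting, key=footprint_mm2)


if __name__ == "__main__":
    for name in FORM_FACTORS:
        print(f"{name}: {footprint_mm2(name)} mm^2")
    print(modules_that_fit(100, 75))
```

By area, COM-HPC Client Size A (11,400 mm²) is roughly 2.5 times the size of COM Express Type 10 (4,620 mm²).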

Because the energy-efficient SoC provides enough AI performance that most applications do not require discrete AI accelerator cards, embedded designers can more easily realize designs optimized for size, weight, power, and cost (SWaP-C).

Facts, Features & Benefits

| Facts | Features | Benefits |
|---|---|---|
| Integrated AI acceleration | In addition to 8 or 16 CPU cores, the SoC includes an integrated NPU5 and up to 12 Xe3 GPU cores. | Handles many edge AI workloads without discrete accelerator cards, enabling engineers to meet strict size, weight, power, and cost (SWaP-C) requirements more easily. |
| Extended temperature range | Prepared for the extended temperature range from -40 °C to +85 °C. | Enables mission-critical applications to run reliably in extreme environments, from arctic cold to desert heat. Simplifies system designs for applications in markets such as in-vehicle, aerospace, energy, robotics, railway, automation, and many others. |
| Built on Intel® 18A process | Intel® 18A process features RibbonFET transistors and PowerVia backside power architecture. | 18A improves the speed, density, and power efficiency of the processor architecture, delivering high-performance x86 computing with an excellent performance-per-watt ratio and high heterogeneous computing density. |
| Ample I/O and connectivity | Up to 20 PCIe lanes (8x PCIe Gen 4 and up to 12x PCIe Gen 5), plus Thunderbolt 4. | Facilitates design for applications with high I/O and connectivity requirements, such as complex sensor fusion systems used in autonomous navigation solutions. |
| Fast memory | Modules based on Intel Core Ultra Series 3 processors support LPDDR5x memory, either soldered down or on LPCAMM modules. | Data-intensive applications and GPGPU AI functions benefit from high memory bandwidth and capacity. Use cases such as natural language processing (NLP), local operation of large language models (LLMs), image classification for AI-accelerated embedded vision with sensor fusion in medical imaging, and SLAM in autonomous vehicles can be significantly accelerated by faster RAM. |
| Different TDP ranges available | Support for TDP ranges from 15 W to 65 W. | Provides excellent overall design flexibility and enables fanless designs for reliable, rugged, and fully sealed systems in mission-critical applications. |
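For a rough sense of what the PCIe lane count in the table means in bandwidth terms, the sketch below estimates the aggregate raw link rate per direction. It assumes the standard PCIe signaling rates (16 GT/s for Gen 4, 32 GT/s for Gen 5) with 128b/130b encoding and ignores protocol overhead, so it is an upper bound rather than a measured figure. The names are illustrative, and actual lane allocation depends on the module.

```python
# Standard PCIe signaling rates in GT/s per lane.
GT_PER_S = {4: 16.0, 5: 32.0}

# Gen 3 and later use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130

# Maximum lane counts per generation, as listed in the table above.
LANES = {4: 8, 5: 12}


def lane_gbytes_per_s(gen):
    """Raw one-direction bandwidth of a single lane in GB/s."""
    return GT_PER_S[gen] * ENCODING_EFFICIENCY / 8


def aggregate_gbytes_per_s(lanes=LANES):
    """Raw one-direction bandwidth across all lanes in GB/s."""
    return sum(count * lane_gbytes_per_s(gen) for gen, count in lanes.items())


if __name__ == "__main__":
    for gen, count in LANES.items():
        print(f"Gen {gen}: {count} lanes x {lane_gbytes_per_s(gen):.2f} GB/s")
    print(f"Aggregate: {aggregate_gbytes_per_s():.1f} GB/s per direction")
```

Under these assumptions, the 12 Gen 5 lanes account for about three quarters of the roughly 63 GB/s total.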

Typical application areas

Medical Diagnostic Devices

On-device AI can enhance patient care by providing better medical imaging and laboratory data analysis, improving diagnostic accuracy, and speeding clinical workflows.

Industrial Automation

On the factory floor, edge AI can be used for predictive maintenance, defect inspection, and process control — boosting efficiency and productivity while reducing network latency and data backhaul costs by performing AI inferencing locally.

Retail Point of Sale

In retail, edge AI enables automated self-checkout kiosks that use camera and sensor data to identify products and validate customer scans in real time. The result is fast, accurate barcodeless scanning and better detection of fraudulent activity to curb retail shrinkage.

Video Surveillance Systems

Intel® Core™ Ultra Series 3 processors deliver powerful graphics and inference performance for large, complex models, including GenAI, while offering industrial-grade durability for smart surveillance systems.

AI-enabled Self-Checkout Terminals

Intel® Core™ Ultra Series 3 processors deliver exceptional performance alongside built-in GPUs that help reduce infrastructure costs. Advanced integrated connectivity also helps keep devices up to date while driving upstream analytics.

Smart Grid

In smart grid applications, Intel® Core™ Ultra Series 3 processors enable real-time control, secure communication, and intelligent energy management across distributed generation and grid control systems.